edge data center

What is an edge data center?

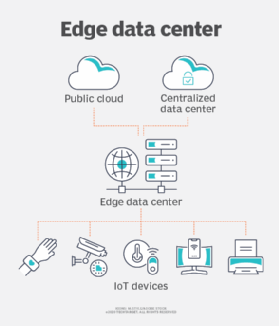

An edge data center is a small data center located close to the edge of a network. It provides the same devices found in traditional data centers but in a smaller footprint, closer to end users and devices. Edge data centers can deliver cached content and cloud computing resources to these devices. The concept builds on edge computing, a distributed IT architecture in which client data is processed as close to the originating source as possible. Because the smaller data centers are positioned close to end users, they can deliver fast services with minimal latency.

In an edge computing architecture, time-sensitive data may be processed at the point of origin by an intermediary server that is located in close geographical proximity to the client. The goal is to deliver content to the end device that needs it as quickly as possible, with as little latency as possible. Data that is less time-sensitive can be sent to a larger data center for historical analysis, big data analytics and long-term storage. Edge data centers work on the same concept, except that instead of a single intermediary server in close geographical proximity to the client, there is a small data center, which can be as small as a box. Although the concept is not new, edge data center is still a relatively new term.

The major benefit of an edge data center is the quick delivery of services with minimal latency, thanks to the use of edge caching. Latency may be a big issue for organizations that have to work with the internet of things (IoT), big data, cloud and streaming services. Edge data centers can be used to provide high performance with low levels of latency to end users, making for a better user experience.
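To get a rough sense of why proximity matters, the sketch below estimates the best-case round-trip propagation delay over optical fiber. It assumes light travels through fiber at roughly 200,000 km/s (about two-thirds of its speed in a vacuum). The distances are hypothetical, and real latency also includes routing, queuing and processing time that this calculation ignores.

```python
# Assumption: signal speed in optical fiber is about 200,000 km/s.
FIBER_SPEED_KM_PER_S = 200_000


def round_trip_ms(distance_km: float) -> float:
    """Best-case round-trip propagation delay in milliseconds over fiber."""
    return 2 * distance_km / FIBER_SPEED_KM_PER_S * 1000


# Hypothetical distances, chosen only for illustration.
sites = [
    ("edge data center", 20),
    ("regional data center", 500),
    ("distant central data center", 4000),
]

for label, km in sites:
    print(f"{label} ({km} km): {round_trip_ms(km):.1f} ms")
```

Under these assumptions, the 20 km site adds about 0.2 ms of propagation delay per round trip, while the 4,000 km site adds about 40 ms before any processing happens.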

Typically, edge data centers connect to a larger, central data center or to multiple other edge data centers. Data is processed as close to the end user as possible, while data that is less critical or less time-sensitive can be sent to a central data center for processing. This allows an organization to reduce latency.
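The split between edge and central processing can be pictured as a simple routing decision. The following is a minimal sketch, not a description of any particular product: the `Reading` type, the `route` function and the 50 ms cutoff are all hypothetical names and values introduced for illustration.

```python
from dataclasses import dataclass

# Hypothetical cutoff: data that needs a response within this many
# milliseconds is handled at the edge. The value is an assumption.
EDGE_DEADLINE_MS = 50


@dataclass
class Reading:
    device_id: str
    payload: dict
    deadline_ms: int  # how quickly a response is needed


def route(reading: Reading) -> str:
    """Return 'edge' for time-sensitive data and 'central' otherwise."""
    return "edge" if reading.deadline_ms <= EDGE_DEADLINE_MS else "central"


readings = [
    Reading("brake-sensor-1", {"pressure": 0.8}, deadline_ms=10),
    Reading("thermostat-7", {"temp_c": 21.5}, deadline_ms=60_000),
]

for r in readings:
    print(r.device_id, "->", route(r))
```

In practice, the decision would likely depend on more than a single deadline value, such as data volume, privacy requirements or available capacity at the edge site.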

5G and other edge data center use cases

Edge data centers can be used by organizations that work with subjects such as the following:

- 5G. A decentralized cell network made of edge data centers can help provide low latency for 5G in areas with high device density.

- Telecommunications companies. With cell-tower edge data centers, telecom companies can get better proximity to end users by connecting mobile phones and wireless sensors.

- IoT. Edge data centers can be used for data generated by IoT devices. An edge data center would be used if data generated by devices needs more processing but is also too time-sensitive to be sent to a centralized server.

- Healthcare. Some medical equipment, such as that used for robotic surgeries, requires extremely low latency and network consistency, which edge data centers can provide.

- Autonomous vehicles. Edge data centers can be used to help collect, process and share data between vehicles and other networks, which also relies on low latency. A network of edge data centers can be used to collect data for auto manufacturers and emergency response services.

- Smart factories. Edge data centers can be used for machine predictive maintenance, as well as predictive quality management. They can also improve the efficiency of robotics used in inventory management.

Characteristics of edge data centers

Most edge data centers share similar key characteristics, including the following:

- Location. Edge data centers will generally be placed near whichever end devices they are networked to.

- Size. Edge data centers will have a much smaller footprint, while having the same components as a traditional data center.

- Type of data. Edge data centers will typically house mission-critical data that requires low latency.

- Deployment. An edge data center may be one in a network of other edge data centers, or be connected to a larger, central data center.

Some edge data centers may also use micro data centers (MDCs). An MDC is a relatively small, modular system that serves smaller businesses or provides additional resources for an enterprise. An MDC is likely to contain fewer than 10 servers and fewer than 100 virtual machines within a single 19-inch box. MDCs feature built-in security and cooling systems, along with flood and fire protection. MDCs are often used for edge computing.

An organization may choose to build an edge data center from MDCs for scalability. For example, a cell/radio site may use micro modular data centers. MDCs and edge data centers shouldn't be conflated, however. An MDC is better thought of as a component that helps create an edge data center.

How edge data centers work

At a basic level, an edge data center works as a connection between two networks. The edge data center is located close to whatever machine is making a request, typically inside an internet exchange point. An internet exchange point is a physical location where multiple network carriers and service providers connect, which allows traffic to flow quickly between networks. The edge data center itself might be made of an MDC, which contains the devices a traditional data center has, just in a smaller footprint. Because requests for content such as JavaScript files or HTML are handled closer to the requesting machine, the time needed to deliver that data back decreases.

Even though an edge data center can provide multiple services on its own, it will typically connect back to a larger data center. This larger data center can provide more cloud resources and centralized data processing, and it can also connect to multiple edge data centers. Each edge data center stores and caches data specific to the edge devices it is located close to.
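The caching behavior described above can be sketched as a small cache that answers repeat requests locally and falls back to the larger data center on a miss. This is an illustrative sketch only: `EdgeCache`, `origin` and the least-recently-used eviction policy are assumptions made for the example, not a description of how any specific edge platform works.

```python
from collections import OrderedDict
from typing import Callable


class EdgeCache:
    """Small LRU cache that falls back to a central origin on a miss."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes], capacity: int = 2):
        self._fetch = fetch_from_origin
        self._capacity = capacity
        self._store: "OrderedDict[str, bytes]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> bytes:
        if key in self._store:
            self._store.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._store[key]
        self.misses += 1
        value = self._fetch(key)  # slower trip to the central data center
        self._store[key] = value
        if len(self._store) > self._capacity:
            self._store.popitem(last=False)  # evict the least recently used entry
        return value


# Hypothetical origin that stands in for the larger, central data center.
def origin(key: str) -> bytes:
    return f"content of {key}".encode()


cache = EdgeCache(origin)
for path in ["/app.js", "/index.html", "/app.js"]:
    cache.get(path)

print(f"hits={cache.hits} misses={cache.misses}")
```

Here the second request for `/app.js` is served from the edge cache, so only the first two requests travel to the origin.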

Some edge data centers can also be managed remotely, as telecommunications companies do with cell-tower edge data centers.

Types of edge data centers

There are two main types of edge data centers. The first is a small facility that a service or colocation provider uses to serve secondary locations. The second is a modularized site placed close to the edge of an organization's own main network. Because many organizations operate across multiple geographies, each location might require access to core company data and systems. Edge data centers based around modularized systems are useful here. A large data center can also be built from a number of smaller modules.

Edge computing locations can be divided into network-based sites and establishment-based sites. Network-based sites generally use a tier-based architecture made specifically to support edge data centers. This approach is favored for its high availability and uptime. For example, network-based sites are used at radio sites, where the goal is to place the site one hop away from mobile and non-mobile users in order to achieve low latency.

Establishment-based edge data centers can use tiered architectures, but for off-premises configurations. These are typically driven by private networks. They can orchestrate transport needs between access and aggregation sites, as well as collect data for processing -- separate from core data processing and storage.

Edge computing vendors

A growing number of edge computing vendors offer edge data center services. In a way, these organizations offer infrastructure as a service, because the edge data centers can be considered infrastructure that is offered as a service. Some example vendors are the following:

- Vapor IO.

- EdgeConneX.

- 365 Data Centers.

Different organizations can become customers of one of these vendors for an edge data center. For example, Cloudflare -- a content delivery network organization -- has become a customer of EdgeConneX.

Impact on existing data center models

With the current and future expansion of 5G, edge data centers may become more popular and widely used, but that doesn't spell an end for existing data center models. Because edge data centers rely on larger data centers, an increase in the number of edge data centers should also increase the use of larger data centers. And as the number of edge IoT devices grows, the overall amount of data to process will still require traditional data centers.

Edge computing vs. fog computing

Fog computing is a similar term and concept to edge computing. Fog computing is a term created by Cisco in 2014 to describe decentralizing computing infrastructure. The term is also closely related to the internet of things. The key difference between edge computing and fog computing is that fog computing places nodes between the cloud and edge devices. Instead of processing data as close to the edge devices as possible, fog computing processes data within an IoT gateway, which is located within the local area network (LAN). Fog computing allows the LAN hardware to be situated physically farther away from the end-user devices. Edge computing and fog computing are similar in that both push some data processing away from centralized data centers and closer to the end-user devices, but edge computing typically takes place in closer physical proximity.